1. Introduction

1.1 Digital Systems and the Emergence of Complexity

The origin of human language remains one of the most profound unsolved questions in evolutionary biology. Language and humanity are deeply intertwined, yet their definitions are often vague and interdependent, rendering artificial intelligence-based attempts to define them circular and inconclusive. This paper proposes that human language represents a digital evolution of the analog phonetic communication systems found in mammals. We hypothesize that the emergence and evolution of digital signal syllables drove the complex development of human intelligence, providing a theoretical framework for understanding increasing complexity through low-noise environments and iterative processing. Analog symbolic communication, which has no phonemes, uses the waveform of a call to express the meaning of a symbol and the volume and frequency of the call to modulate a message. It can share only a few dozen symbols, and these cannot be combined grammatically. Digital signals, by contrast, combine phonemes to produce an unbounded number of symbols, and grammar combines those symbols into complex messages. Specialized signals for long-term storage, such as letters and DNA, are created, stored persistently, and widely shared. Eukaryotes produce progressively more complex structures, such as organelles, cells, organs, individuals, groups, and species. Languages likewise evolve in more complex, specialized, and differentiated directions. Evolution turns an analog system into a digital one, and once a system becomes digital, an even greater leap follows.

The term «digital» is commonly associated with binary computing in electronic circuits. However, digital systems extend beyond computing to include biological systems such as RNA and DNA, which encode the information of life (Kay, 2000). Information theory, as articulated by von Neumann (1963), posits that any integer-based system (greater than 1) can form a digital system, with the signal-to-noise ratio serving as a critical factor in its dynamicity and evolution. What matters is not the number of signals, but that sender and receiver share an identical set of discrete units. Phoneme sharing, in this sense, underlies digital human language and communication. We define a digital system, applicable to language, computing, and biological evolution of life, as one that: (i) employs discrete, finite signals arranged in a one-dimensional array to propagate through noisy channels without error; (ii) processes error-free signals via sophisticated and complex circuits in low-noise environments; (iii) (as a result of (i) and (ii)), reproduces complex structures without loss of complexity; and (iv) evolves to increase complexity over time. In this sense, digital systems are inherently self-amplifying, aligning with complex systems theories. While numerous attempts have been made to define phonemes or sets of phonemes, no single definition has been universally accepted. In this paper, we define a set of phonemes as a set of mutually distinctive minimal auditory units shared among members of a language community, which enables the generation of an infinite number of unique word-signs through combinatorial arrangement.

This definition emphasizes the discrete and combinatorial nature of phonemes essential for digital signaling, and highlights their community-level imprinting during infancy, which guarantees finiteness – another key requirement of digital systems. Syllables, as digital signals, exhibit discrete frequency characteristics unique to each language, suggesting that a finite set of phonemes is imprinted in infancy. We hypothesize that this imprinting occurs through the reinforcement and pruning of cochlear hair cells in response to native phonemic stimuli during the first year of life, the period in which native phoneme perception is established (Kuhl, 2004). Furthermore, complex information is conveyed by constructing sentences that combine content and function words according to grammatical rules. In this regard, linguistic sentences, messenger RNA, and data packets in computer networks share a common structure (for a detailed comparison, see Table 1): information encoded in one-dimensional sequences of discrete, finite signals (Tanenbaum and Weatherall, 2011). Over time, reactive signals – such as syllables, RNA, and voltage bits – evolved into long-term storage systems, including writing, DNA, and magneto-optical media.
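Criteria (i) and (iii) of the definition above can be illustrated with a minimal sketch. It is a toy model, not part of the original argument, and it assumes that noise is bounded below half the spacing between signal levels. The names `nearest_level`, `relay_analog`, and `relay_digital`, and the four-level signal set, are hypothetical choices made for illustration only. Under that assumption, a receiver that snaps each incoming value to the nearest shared level regenerates the signal exactly at every hop, whereas an analog value accumulates noise.

```python
import random


def nearest_level(value, levels):
    """Snap a received value to the closest member of the shared signal set."""
    return min(levels, key=lambda level: abs(level - value))


def relay_analog(value, hops, noise, rng):
    """Analog relay: every hop adds bounded noise and nothing removes it."""
    for _ in range(hops):
        value += rng.uniform(-noise, noise)
    return value


def relay_digital(symbol, levels, hops, noise, rng):
    """Digital relay: every hop adds the same noise, then the receiver
    regenerates the signal by snapping to the nearest shared level."""
    value = symbol
    for _ in range(hops):
        value = nearest_level(value + rng.uniform(-noise, noise), levels)
    return value


if __name__ == "__main__":
    rng = random.Random(0)
    levels = [0, 1, 2, 3]  # hypothetical shared set of discrete units
    noise = 0.3            # assumed to stay below half the level spacing (0.5)
    print("analog :", relay_analog(2.0, 1000, noise, rng))
    print("digital:", relay_digital(2, levels, 1000, noise, rng))
```

The guarantee holds only while the noise stays within the stated bound. Once noise exceeds half the spacing between levels, the digital relay can also make errors, which is one way to read the paper's emphasis on low-noise environments.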

Table 1

Comparison of Digital Signal Systems in language, biology and computing.

| Attribute | Syllable | RNA | Electronic Bit |
| --- | --- | --- | --- |
| System | Language | Life | Computation |
| Emergence Timing | ~66,000 years ago | ~3.8 billion years ago | ~1950s |
| Signal Medium | Acoustic Energy | Molecular Bonding | Electrical Signal |
| Finiteness | Imprinted Native Phonemes | 4 Nucleotide Bases (A, G, U, C) | 2 States (0, 1) |
| Minimum Unit | Syllable (a vowel with consonants) | Codon (64 combinations) | Byte (256 combinations) |
| Network | B-cell/Microglia Network in CSF | tRNA in Cytoplasm | Shift Register in CPU |
| Low-Noise Environment | CSF, Cultivated Zones, Monastery, Library | Cytoplasm, Nuclei, CNS, Uterus | Clean Room, Data Center |
| Linearity | Grammatically Structured Sentences | Messenger RNA | Data Packets |
| Modulation | Grammatical Words | Alternative Splicing, Epigenetics | Protocol Switches |
| Meaning | Memory in Microglia/B-cell Networks in CSF | Protein Function | Device Output |
| Persistent Memory | Writing Systems | DNA | Optical/Magnetic Storage |
| Error Avoidance | Phonemic Redundancy (Sound Symbolism) | Codon Degeneracy (64 codons to 20 amino acids) | Error-Correcting Codes |

To ensure signal fidelity, processing occurs in low-noise environments, such as cultivated zones, academic settings, cellular nuclei, or cooled computational systems. Mechanisms like sound symbolism, codon degeneracy (where 64 codons specify 20 amino acids), and error-correcting codes further enhance signal reliability. The evolutionary leap from non-literate to literate societies can be understood as a digital transition, marked by the evolution of signals from syllables to written characters. The acquisition of bit-like signals has propelled humanity into a new evolutionary stage, further fostering intelligence and civilization. Understanding these digital principles is essential for elucidating this process.
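The combinatorial figures in Table 1 and the notion of codon degeneracy can be checked with a short calculation. The sketch below is illustrative only. The function names are hypothetical, the syllable inventory of 100 is an arbitrary placeholder rather than a measured value, and counting 21 meanings per codon (20 amino acids plus a stop signal) is our assumption for the purpose of the arithmetic.

```python
import math


def fixed_length_signals(alphabet_size, length):
    """Number of distinct sequences of exactly `length` units."""
    return alphabet_size ** length


def signals_up_to(alphabet_size, max_length):
    """Number of distinct sequences of length 1 through `max_length`."""
    return sum(alphabet_size ** n for n in range(1, max_length + 1))


def redundancy_bits(alphabet_size, length, meanings):
    """Bits carried by one unit minus the bits needed to name `meanings` outcomes."""
    return length * math.log2(alphabet_size) - math.log2(meanings)


if __name__ == "__main__":
    print("codons (4 bases, length 3):", fixed_length_signals(4, 3))
    print("bytes (2 states, length 8):", fixed_length_signals(2, 8))
    # Hypothetical inventory of 100 syllables, words of up to 3 syllables.
    print("word-signs:", signals_up_to(100, 3))
    # Assumption: 20 amino acids plus one stop signal = 21 meanings.
    print("codon redundancy (bits):", round(redundancy_bits(4, 3, 21), 3))
```

The spare capacity of roughly 1.6 bits per codon is what allows several codons to map to the same amino acid, so that some single-base errors leave the meaning unchanged.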

1.2 The Dawn of Digital Evolution of Human Language

Mitochondrial DNA analysis has firmly established the African origin of Homo sapiens during the Pleistocene, a consensus widely accepted in the scientific community (Cann, Stoneking, and Wilson, 1987). However, the ecological and cognitive conditions under which language emerged remain poorly understood. The prevailing «pan-African model» posits that human evolution occurred across multiple regions of the continent, supported by archaeological evidence of settlements and lithic technologies (Scerri and Will, 2023). Yet, this framework has largely overlooked the parallel emergence of language and cognitive capacity. We propose a novel hypothesis: during the Late Pleistocene (= Ice Age), humans developed digital signal syllables through a two-stage phonemic evolution. First, click consonants emerged, followed by the introduction of vowels, culminating in syllables – discrete, finite, and linearly combinable units. The emergence of these digital signals marked the birth of language and enabled the reorganization of neural circuits specialized for processing structured grammar and abstract concepts – mechanisms that remain invisible, enigmatic, and procedurally elusive at the deepest levels of human intelligence.

As evolution builds on pre-existing structures (Deacon and Deacon, 1999), early hominins – upright bipeds using stone and bone tools – gradually accumulated ecological and symbiotic knowledge over roughly three million years. These hominins inhabited the harshly arid and exceptionally flat environment of the Kalahari (Dart, 1959, Van der Post 1958, Owens and Owens, 1984), coexisting with diverse animal and plant species. Around 300,000 years ago, the control of fire enhanced nutritional efficiency and energy allocation. By approximately 130,000 years ago, with the onset of an ice age, early humans began occupying coastal caves. These stable, protected environments prolonged infant dependency, increasing exposure to social stimuli and fostering both curiosity and inquisitive behavior in tandem with expanding brain volume (Martin 1990). These transitions are summarized in Table 2 as milestones in the digital evolution of human communication.

Table 2

Evolutionary milestones in the development of digital signal systems in language, from early tool use to modern forward error correction.

| Period | Innovation | Required Adaptation |
| --- | --- | --- |
| 3 Ma | Tool Use | Bipedalism, tool use, cranial adaptations. |
| 300 ka | Fire Use | Social gatherings, improved digestion. |
| 130 ka (MIS 5) | Eusociality & Alarm Calls | Cave dwelling, cooperative childcare, sound imprinting, expanded brain volume. |
| 74 ka (MIS 4) | Vocabulary | Click consonants during Toba volcanic winter; Still Bay culture. |
| 66 ka (MIS 3) | Grammar | Mental protuberance, laryngeal descent; vowels and syllables initiated digital evolution; Howiesons Poort culture. |
| 5 ka | Writing | Writing systems in Mesopotamia enabled persistent syllables, fostering civilization. |
| 2.5 ka | Conceptual Thought | Philosophers/scientists in quiet environments (e.g., academies, monasteries) developed abstraction. |
| 1950s | Bidirectionality | Electronic signal bits enabled searchable, interactive communication (e.g., databases). |
| 21st Century | Forward Error Correction (FEC)* | Ultra-low-noise environments (e.g., AI data centers, reclusive settings) support FEC and interdisciplinary integration of concepts. |

MIS: Marine Isotope Stages

* FEC is a technique to correct errors without contacting information senders.

The involvement of grandparents in childcare likely facilitated the emergence of extended family structures, deepening intra-group communication and knowledge transmission. Cave-dwelling populations began to exhibit eusocial behavior, employing community-specific alarm calls to distinguish between allies and adversaries (Wilson, 2012). Against this evolutionary backdrop, the Toba supereruption (~74,000 years ago) and the ensuing volcanic winter imposed extreme environmental pressures (Ambrose, 1998), potentially catalyzing further vocal, cognitive, and social adaptations.

2. Hypotheses

2.1 Emergence of Consonants

Around 74,000 years ago, the Toba supereruption triggered a period of global cooling lasting approximately 6 to 10 years, reducing average temperatures by 3–5°C. This dramatic environmental stress confined early human populations to caves, where food scarcity and social isolation intensified the need for vocal communication. Within these enclosed communities, shared alarm calls – originally used to distinguish allies from outsiders – evolved into shorter, recombinable vocal units. These mimetic vocalizations, which initially signaled group membership, gradually transformed into discrete sound segments capable of forming referential labels for natural elements, emotional states, and social actions – particularly through communal activities such as singing. This transition, possibly rooted in playful naming practices and environmental reverence, marks the hypothesized origin of consonantal phonemes, which helped construct a shared symbolic worldview among group members. Click consonants, still preserved in Khoisan languages of southern Africa, likely represent remnants of this early phonemic stage, encoding names for landforms, flora, and fauna. Archaeological findings from Blombos Cave in South Africa – a representative site of the Still Bay industry (~71–72 ka) – support this cultural and cognitive expansion, pointing to the emergence of symbolic artifacts and an enriched vocabulary (Henshilwood et al., 2001). While some researchers interpret mitochondrial DNA patterns (e.g., specific SNPs) as evidence of a population bottleneck, we propose an alternative view: this period reflects a linguistic innovation – the emergence of proto-languages. Consonants, particularly clicks, relied solely on tongue or lip movements and were independent of the larynx or vocal cords. Their lack of grammatical function further supports the hypothesis that consonants preceded vowels in the evolution of phonemic structure (Westphal, 1971; Traill, 1997).

2.2 Emergence of Vowels

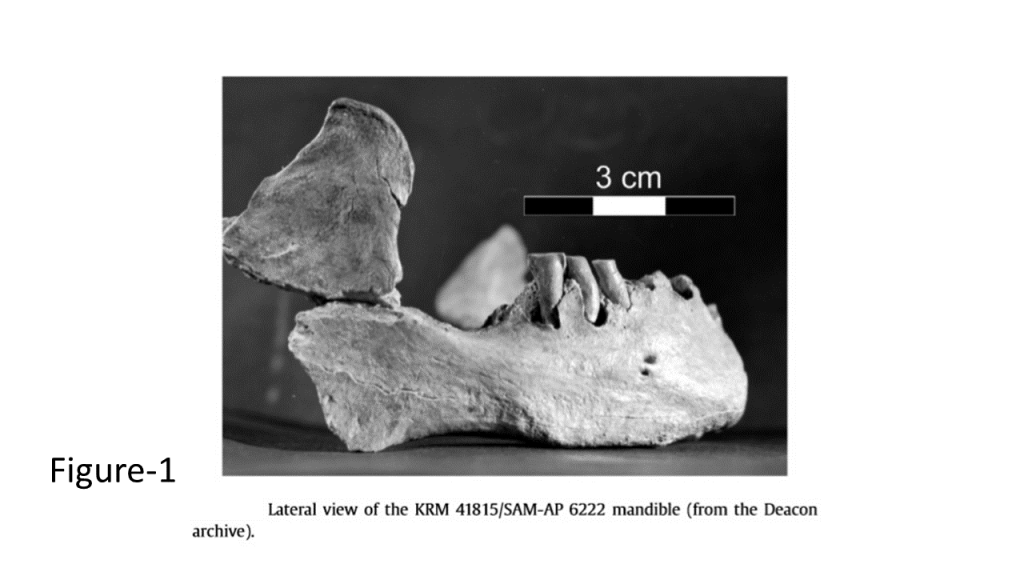

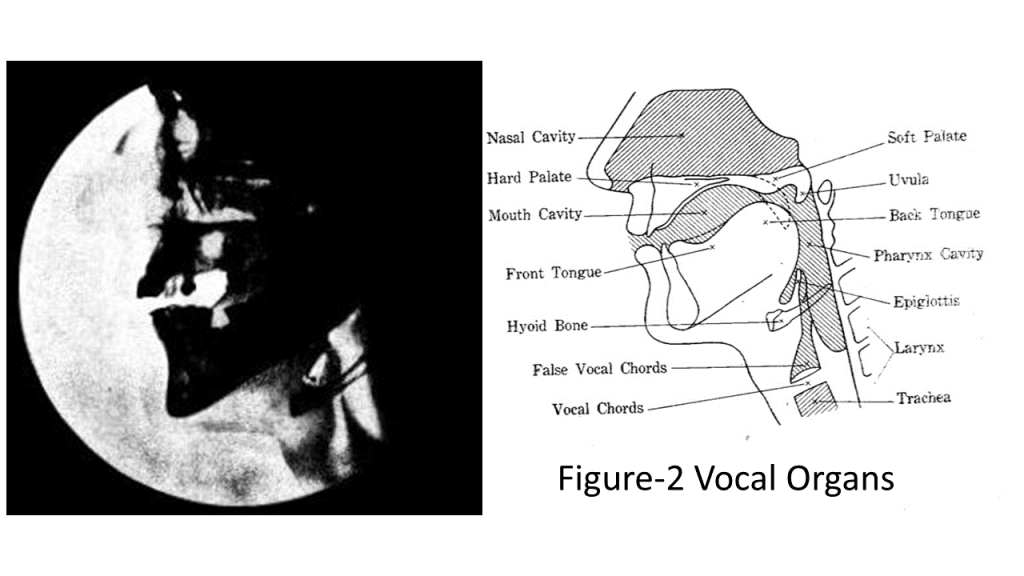

Around 66,000 years ago, as the climate warmed, anatomical adaptations enabled the production of vowels. The development of the mental protuberance – observed in fossils such as KRM-41815 (Figure 1) from Klasies River Mouth Caves, representative of the Howiesons Poort industry – facilitated laryngeal descent and the formation of a vocal tract optimized for vowel resonance (Singer and Wymer, 1982; Rightmire and Deacon, 1991; Morrissey, Mentzer and Wurz, 2023). Repetitive tongue movements associated with click consonants likely contributed to the development of the uniquely human mandible, shaping a vocal tract with balanced horizontal and vertical dimensions, thereby enhancing formant tuning (Daegling, 2012). The robust and shortened mandible, originally adapted in early humans for consuming their staple food in the Kalahari – herbivore bones – later contributed to enhanced vowel resonance capacity, enabling more distinct and stable syllable production. Despite the increased risk of asphyxiation due to laryngeal descent, the retention of this adaptation suggests strong selective pressure – possibly driven by an instinctive urge for linearized expression or by the emergence of a second digital system, that of intelligence, following the first digital system: the genetic code of life. This transformation may reflect an unconscious recognition – embedded in the logic of life – that language and intelligence had begun to evolve as a second digital system, following principles similar to those of the genetic code, in which discrete sequences encode and express information. X-ray imaging by Chiba and Kajiyama (1941) provides physical evidence of this mandibular adaptation (Figure 2). The integration of vowels with consonants produced temporally segmented syllables – phonological units that support linear structuring through grammatical connections.
Since then, all known human languages have relied on syllables as fundamental speech units: discrete, finite, and one-dimensional, fulfilling the criteria of digital signals.

2.3 Digital Systems Dependent on Low-Noise Environment

We communicate using sentences built from syllables. In this process, the sequence of syllables spoken by the sender is normally transmitted to the listener without error. This is possible because syllables are discrete and finite signals; words have strong phonological representations; and our brains are equipped with native-language phoneme maps that enable the recovery of correct sounds even under variation (see Section 2.4). When the listener receives a sentence without error, meaning is automatically recalled. However, the mechanisms through which meaning is recalled – and what kind of meaning is recalled – have rarely been studied. For example, everyday utterances such as “What do you want to eat?” or “Where do you want to go?” may invoke mechanisms very different from those required to understand abstract scientific concepts like post-translational modification in molecular biology or entropy in thermodynamics. How are such invisible phenomena in science correctly understood? This paper does not delve into such mechanisms, but emphasizes the importance of quiet environments, where both speakers and listeners can carefully, slowly, and repeatedly reflect on each word. John von Neumann (1963) characterized digital systems as able to reproduce complexity without loss and to evolve greater complexity over long periods, and he treated the signal-to-noise (S/N) ratio as the key to understanding them. This point connects language evolution to a broader biological trend: biological evolution can be seen as a series of breakthroughs in noise reduction, particularly during mass extinction events that gave rise to structures like the nuclear membrane, the CNS, the cerebrospinal cavities, and the uterus. After environmental crises, these internal spaces functioned as incubators for high S/N signal environments, permitting exponential increases in internal complexity (Table 3).

Table 3

Biological evolution as a sequence of noise-reduction breakthroughs, with subsequent logical complexity growth after environmental recovery.

| Period | Physical Mutation for Noise Reduction | Logical Evolution toward Complexity after Environmental Recovery |
| --- | --- | --- |
| 2 billion years ago | Nucleus | DNA double helix: restriction enzymes; post-translational modification; endosymbiosis with bacteria |
| 540 million years ago | CNS + Ventricles | Sensory organs: motor control; spinal reflex: symbiosis with multiple cells/organs including neural cells/CNS |
| 66 million years ago | Uterus | Umbilical cord: precise growth control; vocal communication; (eu)social group living symbiosis |

In humans, a parallel occurred: syllables, letters, and bits were born for specific purposes – identifying friends and foes, managing land ownership and taxes, or racing to develop missiles. These digital signals, when used in low-noise environments such as caves, cultivated settlements, monasteries, schools, or seclusion, enabled the emergence of grammar, abstract concepts, and forward error correction (Table 4).

Table 4

Language evolution toward complexity in low-noise environments.

| Period | Signal Evolution (Property) | Logical Evolution toward Complexity in Low-Noise Environments |
| --- | --- | --- |
| 66 ka | Syllables | In cultivated lands safe from predators, humans switched from binaural to monaural hearing of speech, repurposing directional auditory nuclei for grammatical vector processing. |
| 5 ka | Letters = Persistent syllables | In monasteries or academies, isolated from worldly duties, people engaged in deep reading and reasoning, acquiring abstract conceptual operations. |
| 60 years ago | Bits = Interactive syllables | With computer networks and AI search functions, scientific concepts can be traced back to their origins, enabling forward error correction and a shared cognitive genome. |

Remarkably, this adaptation was environmental rather than neural, creating a structural asymmetry akin to an evolutionary “original sin”: language became digital, yet our brain circuits remained analog and reflexive. The brain did not evolve new architectures to accommodate the complexity of digital language. Instead, humans reshaped and engineered their environments to reduce noise and improve the signal-to-noise ratio. In other words, once a new signal emerged through physical evolution, it became necessary to seek out quieter environments where that signal’s potential could be fully realized. Human cognitive evolution may advance only in this way.
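The dependence of a digital channel on its signal-to-noise ratio can be made concrete with the Shannon–Hartley capacity formula, C = B log2(1 + S/N). This is a standard result of information theory that we bring in for illustration; it is not taken from the sources cited in this section. The sketch below assumes an idealized channel with additive white Gaussian noise, and the function names and the 3000 Hz bandwidth are hypothetical.

```python
import math


def db_to_linear(snr_db):
    """Convert a signal-to-noise ratio from decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


def channel_capacity(bandwidth_hz, snr_linear):
    """Shannon-Hartley capacity in bits per second for an idealized channel."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


if __name__ == "__main__":
    bandwidth = 3000.0  # hypothetical bandwidth in Hz, for illustration only
    for snr_db in (0, 10, 20, 30):
        capacity = channel_capacity(bandwidth, db_to_linear(snr_db))
        print(f"S/N = {snr_db:2d} dB -> capacity = {capacity:8.0f} bit/s")
```

For a fixed bandwidth, the achievable error-free rate rises only when noise falls relative to signal, which parallels the claim that quieter environments, rather than new signals alone, unlock further complexity.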

2.4 Phonemic Maps and the Immune-Based Network for Meaning Reconstruction

Before exploring the internal mechanisms of phoneme representation and memory formation, it is essential to recognize the limitations of reflex-based language processing. The table below summarizes the weaknesses of the sign-reflex system, which remains embedded in the brainstem circuitry.

Table 5

Weaknesses of sign-reflex mechanisms when applied to language processing in intelligence systems.

| Aspect | Weakness |
| --- | --- |
| Involuntary System | Lacks mechanisms to form new word memories, associate them with appropriate meanings, or control reactions. |
| Recognition System | Easily overlooks new or unfamiliar signs. Tends to disregard the significance of unfamiliar information. |
| Ego-Centric Tendency | Self-affirming with no provision for self-diagnosis or introspection. |
| Ignorance of the Mechanism of Meaning | Not knowing that different people have different meanings. |
| Unsuspecting Nature | No mechanism to verify the authenticity of incoming stimuli. |
| Reflexive Behavior | Reacts immediately without thoughtful consideration. |
| Stubbornness | No capability for circuit reconfiguration or error correction. |
| Difficulty in Reconfiguration | Cannot dynamically update, reconfigure or refine language processing rules based on new experiences. |
| Inability to Handle Abstract Concepts | Inability to get out of the habit of associating words with episodic memory and build pure abstractions. |
| Empiricism | Inability to calmly observe and judge new situations under the decisive influence of precedents. |
| Formalism | Feels secure when words and meanings are connected, and does not ask whether the meaning is correct. |
| Passivity | Lacks genuine or spontaneous intellectual curiosity. |
| Survival-First Instinct | Prioritizes self-preservation over truth, making it susceptible to coercion. |
| Selective Truth-Telling for Ingroup Benefit | Information is filtered based on social boundaries: truth is told to in-group members, while falsehoods or silence are used toward out-group individuals. |
| Single-Sign Limitation | Struggles to process complex systems or multiple signs simultaneously; requires reference models. |

(Author’s Original)

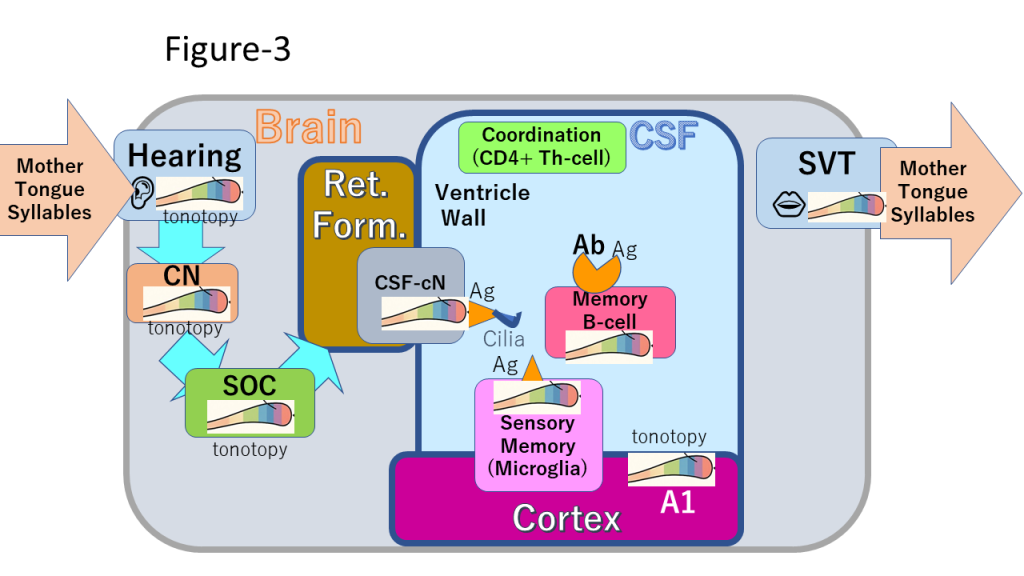

The ability to withhold or distort truth based on social context – particularly in distinguishing friend from foe – is not merely a cultural artifact but a biologically ingrained reflex. As eusocial animals, humans are evolutionarily wired to modulate signal fidelity based on group boundaries. This reflex, rooted in ancient survival mechanisms, persists beneath the symbolic surface of modern language, contributing to the asymmetry between digital expression and analog brainstem control. These limitations highlight the necessity for higher-level systems – such as phonemic maps and CSF-based memory circuits – to support meaning mechanisms including grammatical language and abstract thought. We hypothesize that infants acquire native phonemes through the selective reinforcement and pruning of cochlear hair cells. Auditory stimulation from caregivers activates hair cells tuned to native phoneme frequencies, while non-responsive cells are eliminated. This process creates a phonemic map extending from the cochlear nucleus to the auditory cortex (Figure 3). Unlike traditional models that emphasize cortical plasticity, this framework proposes that foreign accents and dialects are perceived through native phonemic filters, without requiring higher-level cortical inference. By filtering auditory input through pre-established phonemic templates, the system ensures robust and efficient recognition of digital signals.
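The idea of perceiving all input through native phonemic templates can be sketched as a nearest-prototype filter. This is a deliberately simple toy and makes no claim about the underlying biology. The formant targets are rounded illustrative values, and the names `NATIVE_VOWELS` and `perceive` are hypothetical. The sketch shows only the functional consequence described above: any incoming sound, including an accented or foreign one, is assigned to one of a finite set of native categories.

```python
import math

# Illustrative (F1, F2) formant targets in Hz for three vowel categories.
# These are rounded placeholder values, not measurements from this study.
NATIVE_VOWELS = {
    "i": (270.0, 2290.0),
    "a": (730.0, 1090.0),
    "u": (300.0, 870.0),
}


def perceive(f1, f2, phoneme_map=NATIVE_VOWELS):
    """Return the native category whose prototype is closest to the input."""
    return min(phoneme_map, key=lambda p: math.dist((f1, f2), phoneme_map[p]))


if __name__ == "__main__":
    # An accented token that matches no prototype exactly is still heard
    # as one of the listener's native categories.
    print(perceive(650.0, 1200.0))
    print(perceive(280.0, 2200.0))
```

Because the output set is finite and fixed by the map, variation in the input is discarded at the point of recognition, which is the property the text calls robust recognition of digital signals.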

We further propose a novel hypothesis: the existence of an intraventricular immune network involved in language processing. Specifically, word representations are mediated by B lymphocytes circulating in the cerebrospinal fluid (CSF), forming a dynamic network of antibodies and microglial antigens within the brain’s ventricular system. This hypothesis was supported by anecdotal reports shared at a regional neuro-rehabilitation study group employing the Bobath approach, in which cases of progressive aphasia and dyslexia were observed following normal pressure hydrocephalus (NPH) shunting. We hypothesize that shunting leads to a depletion of B cells in the CSF, contributing to the gradual onset of aphasia. The simultaneous progression of aphasia and dyslexia suggests that B cells not only store vocabulary memory but also generate and maintain logical memory representations – such as binary operators like “O” and “X” – for visually processed character strings. In this sense, literacy may depend on B-cell-mediated memory, supporting the principle that «without vocabulary, there is no literacy»: unfamiliar words lack corresponding B-cell networks. These network terminals are shaped by the frequency characteristics of native phonemes and form three-dimensional antigenic structures corresponding to the tonotopic maps established in the cochlear nucleus. These structures engage in localized antigen–antibody interactions, transforming phonological inputs into immune-encoded memory networks. This mechanism offers a new explanation for why foreign vocabularies are interpreted within the framework of native phonemes: the phonological signal activates immune network terminals aligned with native frequency domains. In contrast to corticocentric models that emphasize Broca’s and Wernicke’s areas, this hypothesis foregrounds subcortical, immune-mediated circuits for language processing. 
Functioning as a reflex-like system, this circuitry may distinguish native phonemes as indicators of social affiliation or threat – reflecting an evolutionary legacy rooted in eusocial auditory communication.

3. Writing: Syllables That Persist

Evolutionary biologist John Maynard Smith noted the inherently digital nature of language, suggesting that syllables evolved into letters, which in turn enabled electronic storage and transmission systems (Maynard Smith and Szathmáry, 2000). Although human language is thought to have emerged in South Africa around 66,000 years ago and gradually spread across the globe, it remained unwritten for over 60,000 years. Writing first appeared in Mesopotamia approximately 5,000 years ago, in the vast fertile plains formed by sedimentary deposits from continental collisions. Dynasties governing these expansive territories likely developed writing systems to record ownership and taxation, creating a shared memory infrastructure that extended beyond individual cognitive limits. It was writing that served as the catalyst: it enabled the intergenerational transmission of accumulated knowledge, thereby giving rise to civilization. Unlike ephemeral speech, written characters persist across time and space, necessitating formal educational institutions for their acquisition. Schools, which also originated in Mesopotamia, were developed to teach reading and writing. Writing gave birth to civilization, not vice versa. Once initiated by writing, civilization advances through the refinement of orthographic systems, supported by laws and cultural policies that ensure societal continuity.

Civilization encompasses educational institutions (schools, academies), teacher training programs, professional educators, literary critics, scholars, and public intellectuals. It also encompasses archives and libraries dedicated to the preservation of documents, including the librarians and clerks who manage them, as well as the publishing industry, bookstores, and secondhand bookshops. Civilizations maintain literary and historical traditions, produce dictionaries to standardize language, and foster translation efforts that integrate foreign literature and philosophy into native linguistic frameworks. In this way, civilization progresses as a collective endeavor sustained by those who preserve tradition and contribute to intellectual discourse. Writing-driven civilization accumulates intellectual output in low-noise environments such as monasteries or academies, where conceptual thinking first emerged. Unlike reflexive word use based on one-to-one associations, conceptual thought operates through a one-to-all logic, as described in Piaget’s (1947) additive and multiplicative operations on groups. This shift – from connecting words to individual memories to associating them with sets of memories – marks the second digital leap beyond grammar: the emergence of concept formation.

4. Bits: Interactive Syllables

Vannevar Bush (1945) proposed the concept of MEMEX – a machine designed to help humans organize and connect knowledge across disciplines. This vision inspired Douglas Engelbart, whose work at the Augmentation Research Center laid the foundation for the Alto personal computer, developed at Xerox PARC (Palo Alto Research Center) in 1973. That machine introduced many features we now take for granted: graphical user interfaces, the mouse, text editors, spreadsheets, copy-and-paste functions, email, and even the PDF format (Bardini, 2000). These innovations were not merely for convenience. Their original goal was to augment human thought – enabling us to collect, manage, and evaluate knowledge across fields. This vision advanced with the advent of the World Wide Web (1989), online bookstores like Amazon (1994), and search engines such as Google (1998), all of which brought Bush’s idea closer to reality by making linguistic and scholarly knowledge globally accessible. This digital transformation marks the third major step in the evolution of language: from syllables spoken in real time, to written letters that persist across time, and now to interactive bits. Spoken language connects us in the present moment. Writing allows us to reach across generations – but only if we find the right book and take time to read it. Bits, in contrast, enable instantaneous search, retrieval, and interaction. They render language dynamic and computationally searchable. However, digital information introduces new challenges. In noisy environments – where signals are easily distorted–we must ensure that an author’s original intent is preserved. Just like digital communication systems, we require mechanisms for verifying authenticity. One key principle: a document published while the author is alive is more likely to represent their true intentions. After death, the risk of distortion, misinterpretation, or even forgery increases – demanding careful scrutiny by readers.

In 13th-century Japan, the Buddhist scholar-monk Dōgen (1200–1253) took deliberate measures to prevent his disciples from altering or fabricating his writings. In his 75-volume Buddhist text Shōbōgenzō (Treasury of the True Dharma Eye), which served as an introductory textbook on Buddhist terminology, he numbered the volumes sequentially and added colophons to each, recording the location and date of both his lectures and the final transcription (Tokumaru, 2018). In the colophon of the first volume, he noted that the editorial work had begun in the year before his death, thereby signaling that the text represented the culmination of his teaching. Likewise, in his ten-volume Eihei Kōroku (Dōgen’s Extensive Record, 1252/2010), he concluded each volume with identifying marks specifying the number of sermons and verses it contained – effectively embedding a checksum-like system to discourage unauthorized additions or deletions. It is noteworthy that Dōgen, in his Chinese poetry, twice alluded to the concept of redundancy in information theory through the metaphor “Frost is added to the snow” (Dharma Hall Discourses 473 and 507). This suggests an intuitive grasp of redundancy-based error correction.
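The checksum-like function of such counts can be sketched as follows. This is a minimal illustration under our own assumptions: the `Colophon` structure, the function names, and the sample entries are hypothetical and do not reproduce the actual format of the marks in the Eihei Kōroku.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Colophon:
    """Counts recorded at the end of a volume (hypothetical structure)."""
    sermons: int
    verses: int


def make_colophon(entries):
    """Count entries by kind; each entry is a (kind, text) pair."""
    sermons = sum(1 for kind, _ in entries if kind == "sermon")
    verses = sum(1 for kind, _ in entries if kind == "verse")
    return Colophon(sermons, verses)


def matches_colophon(entries, colophon):
    """True when the volume still contains the recorded number of items."""
    return make_colophon(entries) == colophon


if __name__ == "__main__":
    volume = [
        ("sermon", "first discourse"),
        ("sermon", "second discourse"),
        ("verse", "first verse"),
    ]
    recorded = make_colophon(volume)
    tampered = volume + [("sermon", "spurious discourse")]
    print(matches_colophon(volume, recorded))
    print(matches_colophon(tampered, recorded))
```

A count of this kind detects additions and deletions but not an in-place substitution of one sermon for another, so it is closer to a length check than to a modern cryptographic checksum.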

These colophons and identifying markers may represent the earliest known use of error-correction codes in human intellectual history. When an author’s authentic voice can be verified, a regenerative approach to reading becomes possible. This method treats the reading process as a form of source error correction–reconstructing the author’s experiments and insights through their writing. Even if the author made mistakes, the reader can independently identify and correct them. This self-corrective method mirrors the principle of Forward Error Correction (FEC) in communication systems. Bits thus function like persistent, addressable syllables. They can be read and reinterpreted – not only by humans, but also by search engines and AI systems. In this sense, every human today gains the unprecedented ability to “converse” with the minds of past scientists, philosophers, and thinkers. This is not merely a technical shift; it transforms the scale of human cognition.
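For readers unfamiliar with forward error correction, the principle can be shown with the classic Hamming(7,4) code, in which three parity bits protect four data bits so that the receiver can repair any single flipped bit without asking the sender to retransmit. We choose this code purely as a textbook example; it is not drawn from the sources discussed above, and the function names are our own.

```python
def encode(data):
    """Encode 4 data bits as a 7-bit Hamming codeword.

    Codeword layout (positions 1..7): p1 p2 d1 p3 d2 d3 d4.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(received):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(received)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity check over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # parity check over positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # parity check over positions 4, 5, 6, 7
    error_position = s1 + 2 * s2 + 4 * s3  # 0 means no error detected
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


if __name__ == "__main__":
    word = [1, 0, 1, 1]
    sent = encode(word)
    damaged = list(sent)
    damaged[4] ^= 1  # flip one bit in transit
    print(decode(damaged) == word)
```

The analogy with reading is loose: the code repairs errors using redundancy supplied by the sender, whereas the regenerative reading described above relies on the reader's own reconstruction of the author's reasoning.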

As in signal theory, clarity depends not only on the strength of the message, but also on the surrounding noise. The more precise and less distracted the environment, the better we can understand and refine complex ideas. Reading slowly, returning to passages, and creating quiet spaces–these are the timeless techniques for increasing signal clarity. Interdisciplinary research, too, resembles tuning across frequency bands. Each field speaks in its own codes, with unique assumptions and symbolic systems. To integrate diverse sources of knowledge, we must first define scientific terms clearly, avoiding unnecessary jargon; examine whether they exhibit the properties of a mathematical group; and confirm that they emerged from the recognition of previously unacknowledged phenomena, by revisiting the original works and insights of the scientists who named them. Paradoxically, the very tools designed to amplify human thought now drown it in noise. We live in a world of constant interruptions, multitasking, and digital distractions. Though our tools are more powerful than ever, they are most effective only in environments of quiet focus. Perhaps the true challenge of the digital age is not technical, but ecological: creating the silence necessary for thought (Carr, 2011).

- Hypotheses Development

The hypotheses presented above emerged through a long-term integrative process, synthesizing insights from evolutionary biology, field linguistics, anthropology, archaeology, psychology, animal ecology, and digital communication theory. Rather than appearing fully formed, the core hypothesis of digital language evolution developed gradually, through iterative reconsideration of empirical data, cross-disciplinary reasoning, and keyword-driven exploration of overlooked biological structures and theoretical models, including Jerne’s immune network theory and Vigh’s CSF-contacting neurons (Jerne, 1974, 1984; Vigh et al., 2004). Our initial speculation centered on the possibility that Late Pleistocene cave habitation facilitated vocal communication. Caves offered safe, sound-shielded, dark, and acoustically reverberant environments. These conditions suggested that sound, more than gesture, could serve as a reliable and far-reaching signaling modality. A key turning point came with comparative studies of eusocial animals, especially the naked mole-rat (Heterocephalus glaber), which recognizes kin through olfactory cues, coordinates behavior cooperatively, and employs only 17 distinct acoustic signals (Pepper, Braude, Lacey, and Sherman, 1991). This realization was profoundly disorienting: darkness, it seemed, does not foster vocabulary. The long-held notion that the darkness of the cave environment alone drives linguistic richness was cast into doubt. In this conceptual vacuum, a new idea began to emerge: that the syllable itself, as a discrete acoustic unit, may represent the true minimal building block of language. Through the recombination of these units, an open-ended inventory of word-signs becomes possible. This was a digital breakthrough, sparked by a moment of insight. We propose that syllables evolved from eusocial alarm calls into temporally discrete digital units.
In eusocial species, the ability to distinguish between kin and non-kin, despite similar appearance, is vital for survival. While many species rely on scent, humans evolved to use sound alone. This auditory reliance, likely shaped in cave environments, gave rise to syllables as temporally discrete, combinable units, forming the foundation of digital language. Between 2009 and 2012, we searched extensively for cellular and molecular structures in the cerebral cortex that could account for language memory, but found nothing. Drawing upon Jerne’s theory of immune networks, we began investigating the possibility that language memory is not confined to cortical circuits but is also embedded in neuroimmune structures within the cerebrospinal fluid (CSF). This inquiry led to the formulation of the intraventricular neuroimmune network hypothesis, which posits that word representations are stabilized through interactions among CSF B cells, their antibodies, CSF-contacting neurons, and microglial antigen-presenting networks.

Inspiration also came from earlier thinkers. Von Neumann (1963) foresaw a link between information and thermodynamics, and focused on signal-to-noise ratio in digital systems. Alongside Piaget’s (1947) group theory of cognition and Maynard Smith’s (1999) signal evolution model (syllables → letters → bits), these ideas helped form the foundation of our hypothesis. Table 6 outlines the intellectual trajectory of these ideas, shaped through long-term dialogue and reflection. Key developments emerged from feedback at international conferences, workshops, and study groups hosted by IEICE, IPSJ, and JSAI, as well as symposia we organized for the Japan Society for Evolutionary Studies (JSES in 2020 “Evolution of Modern Human: The Localities of Intellectual Mutation” and 2022 “Information Theory of Evolution”) and the Japan Society for Cognitive Science (JSCS in 2022 “Refined Human Knowledge Genome”). Each step marked not just conceptual expansion, but also a refinement of our understanding of digital language’s saltative evolution and its underlying subcortical neurobiological circuits.

Table 6

Timeline tracing the conceptual development of the digital language evolution hypothesis, based on fieldwork, interdisciplinary research, and conference presentations.

(italics: main hypotheses; bold: key hypotheses).

| Year | Development |
| --- | --- |
| 2007 | Field visit to Klasies River Mouth (KRM) Caves, sparking interest in language and human origins. |
| 2008 | Rejection of cave-based evolution; formulation of the digital language hypothesis, inspired by Jerne’s immune network theory. |
| 2010 | Two-stage phoneme evolution: click consonants (Still Bay) to syllables (Howiesons Poort). |
| 2011 | Analysis of digital networks: physical layer (acoustic signals) vs. logical layer (neural circuits), based on the Internet layer analysis tool, the OSI Reference Model. |
| 2012 | Hypothesis of language processing via CSF B cells, microglia, and CSF-contacting neurons in the brain ventricle. Second visit to KRM. |
| 2013 | Introduction of Forward Error Correction (FEC) for scientific concepts, integrating interdisciplinary insights. Two poster presentations at ICL-19 (Geneva). |
| 2014 | Monaural mother-tongue hearing and vector processing of grammatical syllables via reflex circuits. |
| 2016 | Three-stage digital evolution: syllables → writing → bits; presented at ICLAP2 (Lahore, Pakistan) as “Logical Linguistics: Bricolage and Breakthrough”. |
| 2020 | Concepts as mathematical groups; phoneme sharing from eusocial alarm calls. |
| 2021 | Ultra-low-noise environments for FEC; development of linguistic information reception procedures exploiting bibliographic data and regenerative reception. |
| 2022 | Literacy memory in CSF B-cell networks, inspired by anecdotal reports of progressive aphasia and dyslexia post-NPH shunting. |
| 2023 | Visit to KRM and the Maropeng Centre at the Cradle of Humankind. Realized the evolutionary continuity between early hominins and modern humans. |
| 2024 | 3. PSSA22 presentations: Kalahari origin of bipedalism; fossil mandible KRM-41815 is the evidence of digital evolution of human intelligence (Graaff-Reinet, South Africa). |
| 2025 | Phoneme emergence from eusocial alarm calls in Late Pleistocene South Africa; phoneme map imprinting via tonotopic cochlear nucleus pruning with mother tongue stimuli reinforcement during early language exposure. |

- Hypothesis Testing

4.1 Evolutionary Sequence: Clicks Before Syllables

As part of testing the proposed hypothesis that syllables evolved from alarm-based communication systems, we examine the possible precedence of click consonants over syllables in human evolution. We hypothesize that click consonants emerged before vowels and syllables, as they can be produced without pulmonary airflow or a descended larynx. Their rarity in grammatical function words and limited integration into coherent phonological systems (Westphal, 1971; Traill, 1997) suggest a pre-grammatical origin. Once syllables evolved, populations that migrated out of Southern Africa relied exclusively on syllables, while Khoisan languages retained clicks in lexical words, possibly because the surrounding world continued to be named with clicks. This pattern supports the view that clicks preceded syllables and have been retained primarily in southern African languages.

4.2 Writing and Bits as Indelible and Interactive Syllables

We hypothesize that written letters and digital bits serve as persistent and interactive carriers of syllabic information. Unlike spoken syllables, which depend on immediate shared context, written and digital forms are crafted with unknown readers in mind. These forms are structured to carry meaning reliably across time, allowing them to connect meaningfully with the reader’s memory and understanding without relying on arbitrary interpretation. To test this hypothesis, controlled recall and recognition experiments can be conducted using identical syllabic content presented in spoken form, written on paper, and via digital output devices such as a screen or a speaker. Measures of retention accuracy, recall latency, and interpretive stability over time may reveal whether written and digital syllables function as durable extensions of oral linguistic units. If confirmed, this would support the view that the evolution of literacy (here understood as the formation of visual contact-based memory in individual B cells in response to the visual input of written letters) and of digital media represents the second and third digital leaps beyond spoken syllables, transforming language from an evanescent signal into a persistent, computationally searchable, and electrically transmittable form.

4.3 S/N Ratio, Bit-energy and Conceptual Processing

We hypothesize that conceptual learning and forward error correction (FEC) in human cognition depend on a biologically tuned signal-to-noise (S/N) threshold. Comparative experiments conducted under varying environmental conditions (e.g., classrooms, noisy settings, or quiet library spaces) can help determine the critical S/N level required for stable abstraction and error-resistant learning. However, S/N alone may not fully account for the dynamics of language processing. Drawing on digital communication theory, we also consider the importance of bit energy per noise spectral density (Eb/N₀), which for a channel of bandwidth B equals (S/N)·(B/R); here R (bit rate) reflects the processing speed or syllabic tempo of linguistic input. A rapid influx of information (high R) may dilute the bit energy, reducing interpretive reliability even under high S/N. Moreover, iteration, that is, repeated exposure to the same linguistic content, functions as a cognitive amplifier, enabling convergence on intended meaning through successive approximations. This suggests that high S/N, optimal Eb/N₀, and iterative reinforcement together form the physiological basis of robust conceptual processing.
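The dilution of bit energy at high bit rates can be made concrete with the standard identity from digital communication theory, Eb/N₀ = (S/N)·(B/R), where B is the channel bandwidth and R the bit rate. The following is a minimal sketch of that arithmetic; the function name and the numerical values (a 20 dB S/N and a 4 kHz bandwidth) are assumptions chosen only for illustration and are not measurements of speech or cognition.

```python
import math

def ebn0_db(snr_db, bandwidth_hz, bit_rate):
    """Eb/N0 in dB from S/N (dB), bandwidth B (Hz) and bit rate R (bit/s).

    Uses the standard identity Eb/N0 = (S/N) * (B / R), expressed in decibels.
    """
    return snr_db + 10 * math.log10(bandwidth_hz / bit_rate)

# Hypothetical values, chosen only for illustration.
SNR_DB = 20.0        # signal-to-noise ratio, held fixed
BANDWIDTH_HZ = 4000  # assumed channel bandwidth

for rate in (1000, 4000, 16000):
    value = ebn0_db(SNR_DB, BANDWIDTH_HZ, rate)
    print(f"R = {rate:>5} bit/s -> Eb/N0 = {value:.1f} dB")
```

With S/N held constant, each fourfold increase in R lowers Eb/N₀ by about 6 dB, which is the sense in which a faster input stream carries less energy per bit even in a quiet environment.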

4.4 CSF B Lymphocytes and Verbal Memory

We hypothesize that B lymphocytes floating in the cerebrospinal fluid (CSF) play a central role in verbal memory formation. When a new symbol (a conditioned stimulus such as a buzzer, bell, or metronome) is heard and followed by food or poison introduced into the mouth, salivation comes to occur at the mere hearing of the conditioned stimulus. This is the starting point of a conditioned reflex experiment. At this time, a specific antigen–antibody pair corresponding to the envelope of the speech waveform of the word is produced. The antigens are expressed on microglia, coding for multimodal sensory memories, and on CSF-contacting neurons (CSF-cNs), which become activated by afferent signals at the ventricular wall. Antibodies, in turn, are expressed on the membrane surfaces of B cells floating within the CSF. Each antibody contains three amino acid modules, the complementarity-determining regions (CDRs), each of which can also function as an antigen. This property allows B cells to interact not only with CSF-cNs and microglia but also with other B cells, thereby forming a decentralized, distributed network. We propose that this dynamic, immune-based memory network underlies conscious access to verbal representations. To experimentally test this hypothesis, we suggest the following three approaches:

(1) Experimental Induction of New Verbal Memory

Using proper nouns unfamiliar to participants (e.g., names of foreign mountains, rivers, or historical figures), we can examine memory formation. Participants read the name aloud 10–20 times daily over 3–5 consecutive days, with or without writing out the character string and/or receiving a 1-minute explanation of the associated referent. After one, three, and five days, they are asked whether they can spontaneously recall and articulate the name and explain the referent’s significance. Long-term retention is then tested at one month, six months, and one year. A parallel experiment on episodic memory could involve unfamiliar foreign dishes (e.g., haggis, thieboudienne). Participants sample the food over 3–5 consecutive days and are later asked to recall the dish’s name and describe it in their own words, with follow-up tests for retention at the same intervals.

(2) Recognition of Literacy Memory

Participants are presented with 10 to 15 familiar food or beverage names (e.g., sandwich, sushi, omelet, barbecue, spaghetti) rendered in unfamiliar scripts (e.g., Chinese characters, Cyrillic, Thai, Hebrew, or Hangul). The association between each character string and its meaning in their native language is explained only once or twice. Recognition is tested a few hours later, the following day, one week later, and again at one month, six months, and one year to assess the stability of literacy memory mediated by B cells.

(3) Clinical Correlation Between CSF Loss and Aphasia

(a) Statistical Analysis: Compare incidence rates of aphasia and alexia between neurosurgical patients with and without CSF loss or blood–brain barrier disruption.

(b) Rehabilitation Protocol: For patients who have lost the names of familiar items (e.g., foods), provide repeated exposure to pictures or real objects, paired with verbal repetition of the name (10–20 times per session over 3–5 days). Recovery of naming ability would be used as a measure of verbal memory cell regeneration.
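The statistical comparison in (a) could be carried out in several ways; one simple option is a 2×2 table analyzed with a risk ratio and Fisher's exact test. The following is a minimal sketch under that assumption. The function names are illustrative, and the patient counts are hypothetical placeholders, not data.

```python
from math import comb

def risk_ratio(a, b, c, d):
    """Risk ratio for a 2x2 table.

    a, b: cases and non-cases in the exposed group (e.g., with CSF loss)
    c, d: cases and non-cases in the unexposed group (without CSF loss)
    Undefined (division by zero) when c is 0 or a group is empty.
    """
    return (a / (a + b)) / (c / (c + d))

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value.

    Sums the hypergeometric probabilities of all tables with the same
    margins that are no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small tolerance so tables tied with the observed one are included.
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= p_obs * (1 + 1e-9))

# Hypothetical counts, for illustration only (not data):
# aphasia / no aphasia among patients with and without CSF loss.
with_loss = (12, 88)
without_loss = (4, 96)

rr = risk_ratio(*with_loss, *without_loss)
p = fisher_exact_two_sided(*with_loss, *without_loss)
print(f"risk ratio = {rr:.2f}, two-sided p = {p:.4f}")
```

An exact test is used here because aphasia is likely to be a rare outcome, so expected cell counts may be too small for a chi-squared approximation. A real study would also need to adjust for confounders such as lesion site and age, which this sketch does not address.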

- Implications

5.1. Missing Links with Pre-Languages

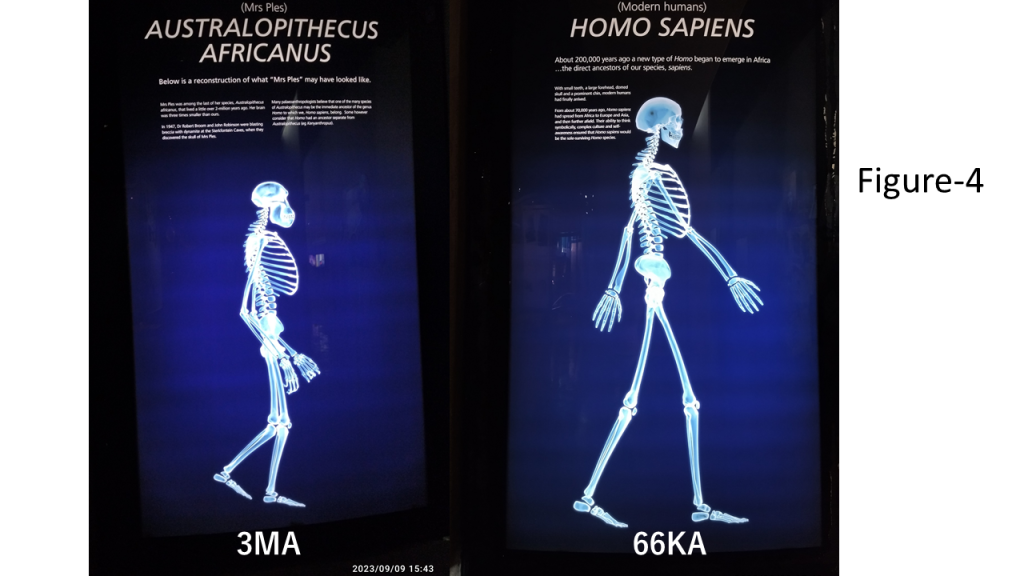

A pivotal evolutionary development in the emergence of modern humans was the transformation of the mandible, which enabled the formation of a vocal tract capable of producing vowel resonance and digital syllables, key components of human speech. Our 2007 visit to the Klasies River Mouth Caves first alerted us to the digital structure of human language (see Hypotheses Development), prompting investigation into how signals propagate between individuals and are processed by complex neural circuits in the brain. Hypotheses regarding the origin of phonemes further crystallized following our visit to the Maropeng Centre in South Africa in 2023. There, we were struck by the morphological similarities between Australopithecus africanus and modern humans (Figure 4), similarities that had been noted by Raymond Dart and later supported by the taxonomist Ernst Mayr, who classified them within the same genus (Dart, 1959). These observations led us to hypothesize that early bipedal hominins in the extremely flat Kalahari, already using tools and fire, began to inhabit caves during the Ice Age and gradually acquired syllables. Human evolution, we propose, unfolded both in the expansive plains of the Kalahari and in the large coastal caves at the southern tip of Africa. While early hominins in the Kalahari likely communicated through non-verbal or pre-linguistic means for millions of years, the emergence of syllables marked a sudden and unprecedented leap: a digital discontinuity in the evolutionary timeline.

5.2. Roadmap for Digital Evolution

Because syllables function as digital signals, the invention of writing enabled linguistic information to be stored, transmitted across generations, and disseminated across space, thereby giving rise to complex civilizations. This progression–from syllables as discrete oral signals, to writing as persistent representations, and finally to digital bits as interactive signals–constitutes a three-stage digital evolution of human language. Today, digital bits – interactive, addressable (uniquely locatable), and searchable (logically retrievable) – represent the next phase in this signal evolution. If properly mastered, this signaling system may enable yet another leap in human intelligence, potentially surpassing the complexity achieved in previous stages by several orders of magnitude. We further speculate that genetically guided behaviors – such as a child’s transition to monaural listening of the mother tongue around age three, or the strong interest around age ten in puns, riddles, and rule-breaking exceptions – may represent innate, genetically programmed developmental milestones in acquiring digital language processing for grammar and concepts. These hypotheses warrant systematic investigation through developmental psychology and educational research.

5.3. Language Processing by CSF B Cells

The hypothesis that language processing involves an intraventricular immune network introduces a novel neurobiological framework. It suggests that language memory is mediated not only by cortical glial circuits but also by subcortical structures – particularly the cochlear nucleus, which is attuned to the phonemic features of the mother tongue – and by immune networks within the cerebrospinal fluid (CSF), including B lymphocytes. This model suggests that stable phonemic encoding and consistent word recall may depend on B cell interactions at the CSF–brain interface. Accordingly, certain forms of aphasia may result from the depletion of B cells in the CSF, a possibility that warrants empirical investigation. Furthermore, it is important to examine whether CSF shunting in patients with normal-pressure hydrocephalus (NPH) contributes to immune cell loss, potentially leading to progressive aphasia and dyslexia. If validated, these findings would warrant the development of novel rehabilitation strategies grounded in the CSF immune network framework.

5.4. Archaeological Context and the Need for Reappraisal

The hypothesis of syllable-based language evolution is grounded in South African archaeological sites, such as Blombos and Klasies River Mouth, which provide critical evidence of early symbolic behavior and phonemic development. However, the global recognition of these sites has been limited, partly due to historical isolation during the 1960s–1990s academic boycott of South Africa. Despite recent UNESCO inscriptions (e.g., Pinnacle Point), older sites with richer fossil and cultural records remain underexplored (ICOMOS, 2024). Reintegrating these findings into global discourse is essential for understanding the digital evolution of language and human cognition.

- Conclusion

This paper proposes a two-stage model for the evolution of phonemes during the Late Pleistocene. Around 74,000 years ago, in the cold aftermath of the Toba supereruption, click consonants emerged as segmented, reflex-compatible alarm signals. Approximately 66,000 years ago, the development of the mental protuberance enabled vowel production, giving rise to syllables – discrete, finite, and temporally structured digital units. Writing systems appeared around 5,000 years ago, and binary bits in the mid-20th century, marking the transformation of speech signals into persistent and interactive forms. This trajectory is best understood as a case of digital evolution within the broader framework of complex systems theory. Digital evolution has markedly increased cognitive demands on individuals. The saltative biological transitions – from prokaryotes to eukaryotes, from eukaryotes to vertebrates, and from vertebrates to mammals – are mirrored by linguistic advances within a mere 70,000 years. This progression is evidenced by archaeological findings from Blombos Cave and Klasies River Mouth Caves, the unique linguistic roles of click consonants and syllables, the pruning of cochlear hair cells, and the proposed intraventricular immune network. Together with the symbolic evolution from syllables to letters to bits, these elements outline a roadmap for the digital transformation of human language. Language stands as the pinnacle of four billion years of evolutionary refinement. It now calls upon us to transcend mere spinal reflexes and eusocial instincts, and to embrace truth-seeking as intelligent, reflective beings (see Table 2). A hypothetical allele favoring the joy of learning and discovery may be essential for this next step in human evolution.

Acknowledgments

We express our sincere gratitude to the organizers and participants of the research meetings hosted by the Institute of Electronics, Information and Communication Engineers (IEICE), the Information Processing Society of Japan (IPSJ), and the Japanese Society for Artificial Intelligence (JSAI). Between 2009 and 2023, particularly during the intensive periods of 2009–2014 and 2020–2022, we participated in more than 100 meetings, engaging in discussions that significantly accelerated the development of our hypotheses, as outlined in Table 6 (Hypotheses Development). These interactions were instrumental in shaping this work, particularly in refining the digital linguistic framework, the role of signal-to-noise ratios, and the reconsideration of Australopithecus africanus in language evolution.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Use of AI

During the preparation of this work, the author(s) used ChatGPT and Grok in order to improve the written English and to find appropriate research articles. After using these tools/services, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.

Figure 1. Fossil mandible from Klasies River Mouth Caves (Morrissey et al., 2023), excavated from a ~100 ka layer but showing a fully developed mental protuberance. Morphology suggests it may be intrusive, possibly from a later burial.

Figure 2. X-ray images of mandibular and oral cavity morphology (Chiba and Kajiyama, 1941), illustrating how anterior oral cavity length and sublingual space affect vowel resonance.

Figure 3. Conceptual diagram of the intraventricular immune cell network for language processing, showing CSF B lymphocytes, CSF-contacting neurons, and microglial antigen-presenting cells (original work by the author): CN(Cochlear Nuclei), SOC (Superior Olivary Complex), SVT (Supralaryngeal Vocal Tract), Ret. Form. (Reticular Formation), CSF (Cerebrospinal Fluid)

Figure 4. Comparative skeletal display of Australopithecus africanus and Homo sapiens, photographed by the author at the Maropeng Centre, Cradle of Humankind, South Africa.

Table 1. Comparative attributes of three digital signal systems–syllables in language, RNA in life, and electronic bits in computation–highlighting parallels in structure, function, and error control.

Table 2. Evolutionary milestones in the development of digital signal systems in language, from early tool use to modern forward error correction.

Table 3. Biological evolution as a sequence of noise-reduction breakthroughs, with subsequent logical complexity growth after environmental recovery.

Table 4. Language evolution as a progression of signal types in low-noise environments, from syllables to letters to interactive bits.

Table 5. Weaknesses of sign-reflex mechanisms when applied to language processing in intelligence systems.

Table 6. Timeline tracing the conceptual development of the digital language evolution hypothesis, based on fieldwork, interdisciplinary research, and conference presentations.

References

1. Ambrose, S. (1998). Late Pleistocene human population bottlenecks, volcanic winter, and the differentiation of modern humans. J. Hum. Evol., 34, 623–651. https://doi.org/10.1006/jhev.1998.0219

2. Bardini, T. (2000). Bootstrapping: Douglas Engelbart, Coevolution, and the Origins of Personal Computing. Stanford University Press.

4. Bush, V. (1945). As We May Think. Atlantic Monthly, 176(July), 101–108.

5. Cann, R. L., Stoneking, M., & Wilson, A. C. (1987). Mitochondrial DNA and human evolution. Nature, 325(6099), 31–36.

6. Carr, N. (2011). The Shallows: What the Internet Is Doing to Our Brains. W. W. Norton & Co.

7. Chiba, T., & Kajiyama, M. (1941). Vowels. Tokyo: Kaiseikan Publishing Company.

8. Dart, R. A. (1959). Adventures with the Missing Link. Institute Press.

9. Deacon, H. J., & Deacon, J. (1999). Human beginnings in South Africa: uncovering the secrets of the Stone Age. Altamira Press.

10. Daegling, D. J. (2012). The Human Mandible and the Origins of Speech. J. Anthropology. https://doi.org/10.1155/2012/201502

11. Dogen’s Extensive Record (2010 English version, Original 1252). Translated by T. D. Leighton & S. Okumura. Wisdom Publications.

12. Scerri, E. M. L., & Will, M. (2023). The revolution that still isn’t: The origins of behavioral complexity in Homo sapiens. J. Hum. Evol., 179, 103358. https://doi.org/10.1016/j.jhevol.2023.103358

13. Henshilwood, C. S., et al. (2001). An early bone tool industry from the Middle Stone Age at Blombos Cave, South Africa: implications for the origins of modern human behaviour, symbolism and language. J. Hum. Evol., 41, 631–678. https://doi.org/10.1006/jhev.2001.0515

14. ICOMOS, Advisory Body Evaluation (2024). Pleistocene Occupation Sites of South Africa (South Africa) No 1723.

15. Jerne, N. K. (1974). Towards a Network Theory of the Immune System. Ann. Immunol. (Paris), 125C(1–2), 373–389.

16. Jerne, N. K. (1984). The Generative Grammar of the Immune System (The Nobel Lecture).

17. Kay, L. E. (2000). Who Wrote the Book of Life? A History of the Genetic Code. Stanford University Press.

18. Kuhl, P. K. (2004). Early language acquisition: cracking the speech code. Nature Reviews Neuroscience, 5, 831–843. https://doi.org/10.1038/nrn1533

19. Martin, R. D. (1990). Primate Origins and Evolution: A Phylogenetic Reconstruction. Chapman and Hall.

20. Maynard Smith, J., & Szathmáry, E. (1999). The Origins of Life: From the Birth of Life to the Origin of Language. Oxford University Press.

21. Morrissey, P., Mentzer, S. M., & Wurz, S. (2023). The stratigraphy and formation of Middle Stone Age deposits in Cave 1B, Klasies River Main site, South Africa, with implications for the context, age, and cultural association of the KRM 41815/SAM-AP 6222 human mandible. J. Hum. Evol., 183.

22. Owens, M., & Owens, D. (1984). Cry of the Kalahari.

23. Pepper, W. J., Braude, S. H., Lacey, E. A., & Sherman, P. W. (1991). Vocalization of the Naked Mole-Rat. In R. D. Alexander et al. (Eds.), The Biology of the Naked Mole-Rat. Princeton University Press.

24. Piaget, J. (1947). La psychologie de l'intelligence. Paris: Armand Colin.

25. Rightmire, G. P., & Deacon, H. J. (1991). Comparative studies of Late Pleistocene human remains from Klasies River Mouth, South Africa. J. Hum. Evol., 20, 131–156.

26. Singer, R., & Wymer, J. (1982). The Middle Stone Age at Klasies River Mouth in South Africa. University of Chicago Press.

27. Tanenbaum, A., & Wetherall, D. J. (2011). Computer Networks (5th ed.). Prentice Hall.

28. Tokumaru, K. (2018). Zero Error Requirement for Incoming Linguistic Information and Postscript Coding by Dogen (1200–1253). ВЕСТНИК КУРГАНСКОГО ГОСУДАРСТВЕННОГО УНИВЕРСИТЕТА, 2(49), 97–103.

29. Traill, A. (1997). Linguistic phonetic features for clicks. In R. K. Herbert (Ed.), African linguistics at the crossroads: papers from Kwaluseni (1st World Congress of African Linguistics, Swaziland, 18–22 July, 1994), 99–117.

30. Van Der Post, L. (1958). The Lost World of the Kalahari. Hogarth Press.

31. Vigh, B., et al. (2004). The system of cerebrospinal fluid-contacting neurons. Its supposed role in the nonsynaptic signal transmission of the brain. Histol. Histopathol., 19, 607–628. https://doi.org/10.14670/HH-19.607

32. Von Neumann, J. (1963). The General and Logical Theory of Automata. In Design of computers, theory of automata and numerical analysis (Vol. 5, A. H. Taub, Ed.). Pergamon Press.

33. Westphal, E. O. J. (1971). The click languages of Southern and Eastern Africa. In T. A. Sebeok (Ed.), Current trends in Linguistics (Vol. 7: Linguistics in Sub-Saharan Africa). Mouton.

34. Wilson, E. O. (2012). The Social Conquest of Earth. Liveright Publishing.